Poster

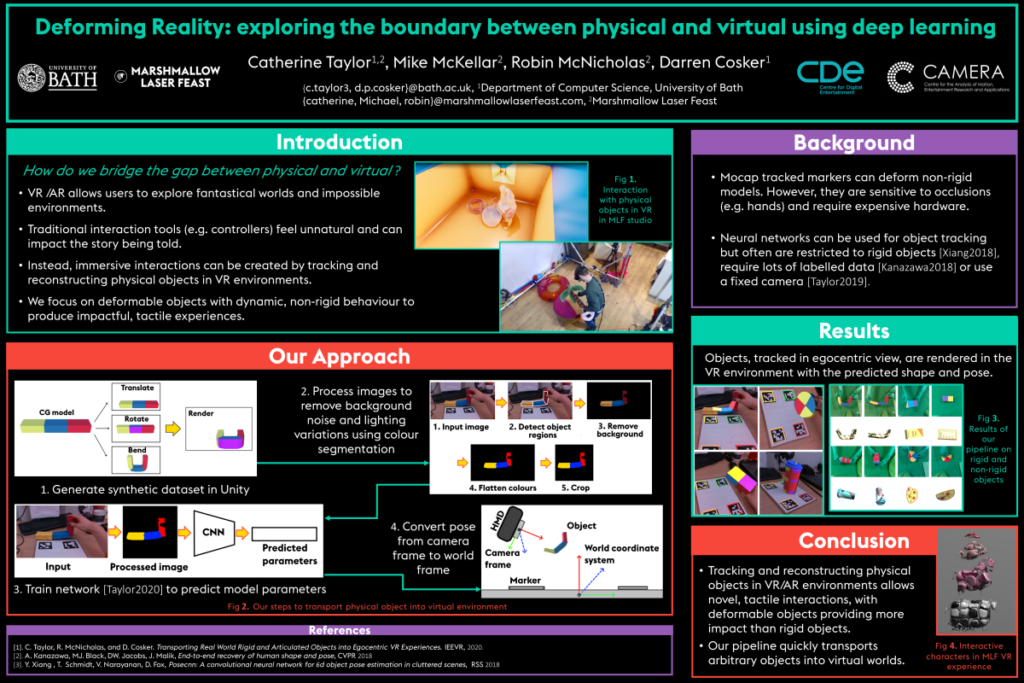

Deforming Reality: exploring the boundary between physical and virtual using deep learning

Interactive performances and immersive theatre pieces that use virtual or augmented reality (VR/AR) transport individuals into fantastical virtual worlds and let them explore environments that would otherwise be impossible. Marshmallow Laser Feast (MLF) uses such technology to create cutting-edge experiences that bridge the gap between the physical and virtual worlds. Traditional means of interaction (e.g. controllers or hand gestures) involve the audience more than passively watching a performance does. However, they can feel unnatural and unintuitive. This can prevent individuals from becoming fully immersed in the virtual experience and can detract from the story being told.

To overcome these limitations, research at the University of Bath and Marshmallow Laser Feast has focused on creating immersive and engaging interactions using VR props. VR props are created by tracking physical objects and reconstructing their behaviour in virtual worlds, which enables tangible interactions in VR experiences. This novel tactile interaction opens new opportunities for immersive experiences and allows MLF to further narrow the gap between the physical and virtual worlds in their events.

Motion capture systems are one potential solution to this task. A sparse set of marker points on the surface of an object is tracked and used either to drive a rigged model or to track a rigid object. However, these systems require costly, non-standard hardware and are sensitive to marker drift, which limits their use in practice. Instead, we design a system that tracks both rigid and non-rigid objects using a consumer-grade RGBD camera. This camera can be attached to a VR head-mounted display, creating a dynamic capture volume. Our tracking pipeline combines traditional computer vision approaches with deep learning. We design a convolutional neural network that predicts the 6DoF rigid pose and, where applicable, the non-rigid model parameters of a VR prop from a single RGB image. Additionally, we propose a synthetic training data generation strategy using Unity. It allows arbitrary physical objects to be quickly and efficiently transported into virtual environments without a time-consuming manual capture process. We demonstrate results for several rigid and non-rigid objects.
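To make the network's output concrete, the sketch below shows one way a prediction could be turned into something that drives a virtual prop. A flat output vector is split into a 4x4 rigid transform (the 6DoF pose) and a vector of non-rigid model parameters. The output layout used here is a hypothetical assumption, not the layout used in this work: a translation `[tx, ty, tz]`, then a quaternion `[qw, qx, qy, qz]`, then `k` deformation parameters. The function names `quaternion_to_matrix` and `decode_prop_state` are also illustrative. For a rigid prop, `k` is simply zero.

```python
import numpy as np


def quaternion_to_matrix(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix.

    The quaternion is normalised first, because a network's raw output
    is not guaranteed to have unit length.
    """
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def decode_prop_state(output, num_deform_params):
    """Split a flat prediction into a 4x4 rigid transform and non-rigid parameters.

    Hypothetical layout (an assumption for illustration only):
        [tx, ty, tz, qw, qx, qy, qz, d_0, ..., d_{k-1}]
    where k = num_deform_params (k = 0 for a rigid prop).
    """
    output = np.asarray(output, dtype=float)
    expected = 7 + num_deform_params
    if output.shape != (expected,):
        raise ValueError(f"expected {expected} values, got shape {output.shape}")

    transform = np.eye(4)
    transform[:3, :3] = quaternion_to_matrix(output[3:7])  # rotation block
    transform[:3, 3] = output[:3]                          # translation column
    deform = output[7:]                                    # non-rigid parameters
    return transform, deform


if __name__ == "__main__":
    # A rigid prop with an identity rotation, placed 0.5 units in front of the camera.
    pose, params = decode_prop_state([0.0, 0.0, 0.5, 1.0, 0.0, 0.0, 0.0], 0)
    # Map a model-space vertex (homogeneous coordinates) into camera space.
    print(pose @ np.array([0.1, 0.0, 0.0, 1.0]))
```

A quaternion is only one of several possible rotation parameterisations. It is used here because it is compact and easy to renormalise. In a real pipeline, the transform would be applied to the prop's virtual model each frame, and the deformation parameters would drive its rig or deformation model.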

Catherine Taylor

BIO

Catherine Taylor is a final-year EngD student in the Department of Computer Science at the University of Bath and a Research Engineer at Marshmallow Laser Feast. Her research focuses on tracking and simulating deformable objects for virtual environments using deep learning. She has published papers on her research at ISMAR 2019 and WEVR 2020 and presented posters at SIGGRAPH 2019 and IEEE VR 2020. Alongside her studies, Catherine has tutored courses in computer vision, C and Java. Before beginning her EngD, she completed a degree in mathematics at the University of Edinburgh. Catherine is passionate about the role that computer vision and deep learning have to play in the future of immersive experiences.